Objective

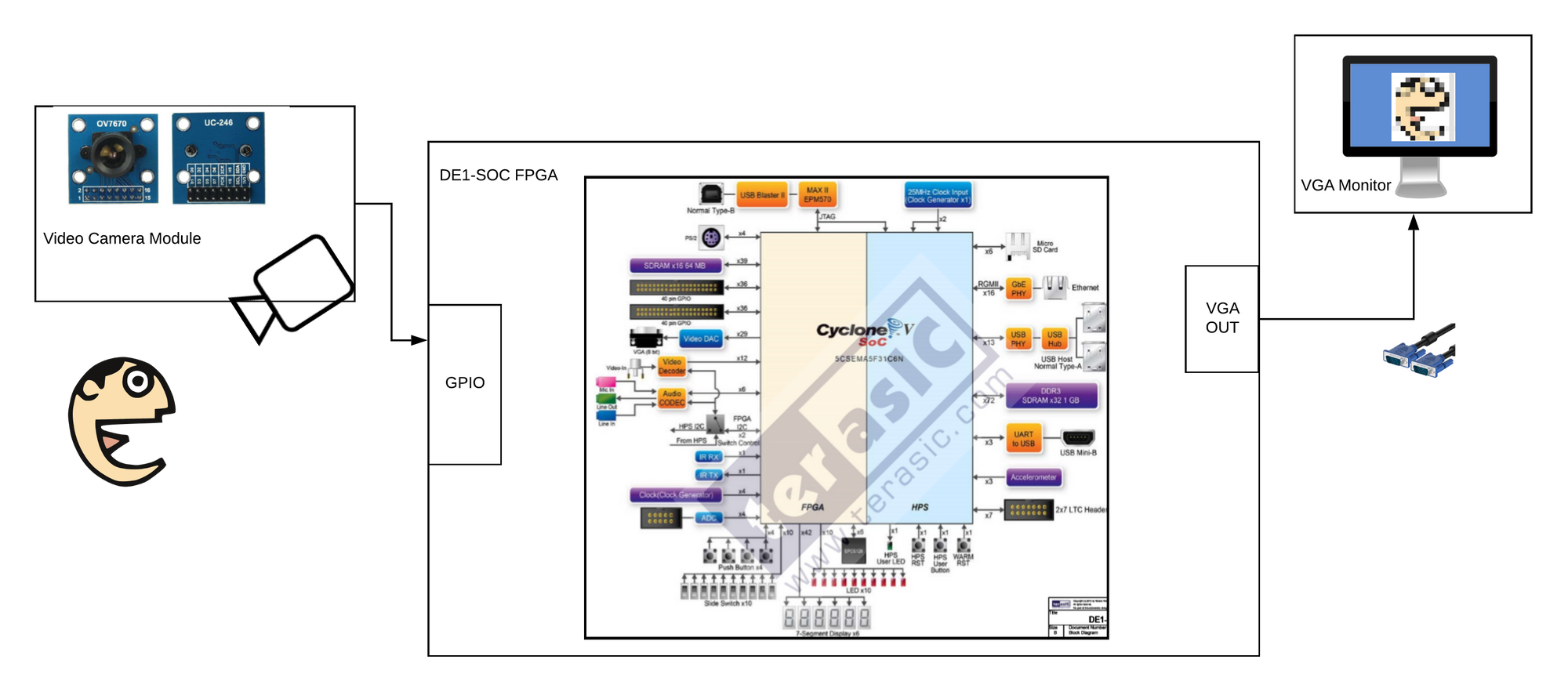

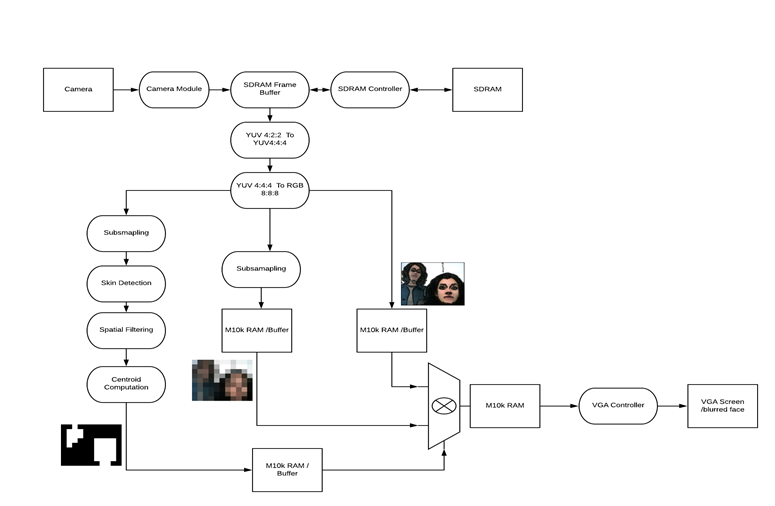

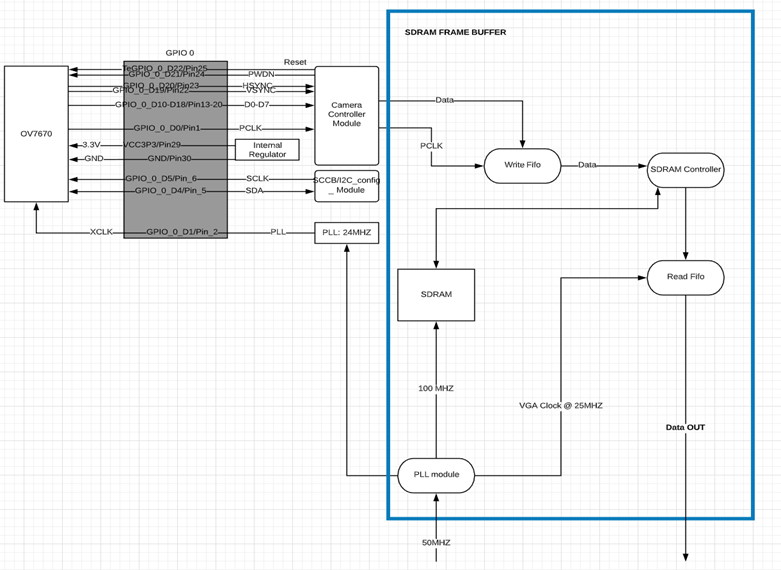

The objective of this project is to build a real-time face anonymizer that detects faces in real time and blurs them. Image frames grabbed from a camera are fed to an FPGA, which performs the face detection and blurring and outputs the resulting frames to a monitor. A high-level diagram of the design architecture is shown in Figure 1.

Background Information

Face Detection Methods

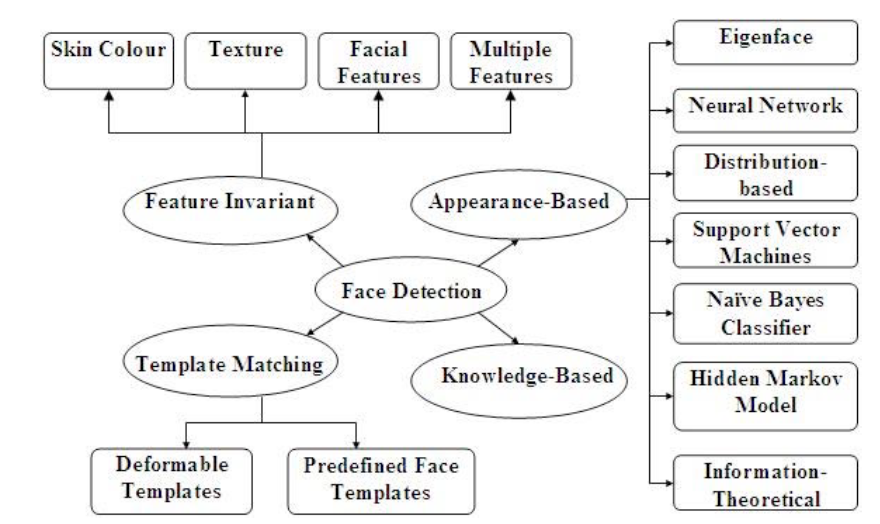

Figure 2 shows the different types of facial detection algorithms. There are four main types of facial detection methods (appearance-based, knowledge-based, template matching, and feature invariant), each with further subdivisions as shown in Figure 2. This project explores the feature invariant method, specifically its color segmentation subcategory. Color segmentation was chosen because its low computational requirements make it the most suitable for a direct hardware implementation such as an FPGA.

Color Threshold-Based Skin Detection

Color Spaces

A color space defines how the information received from the camera's image sensor is encoded and transferred. Two color spaces are referenced in this design: RGB and YUV.

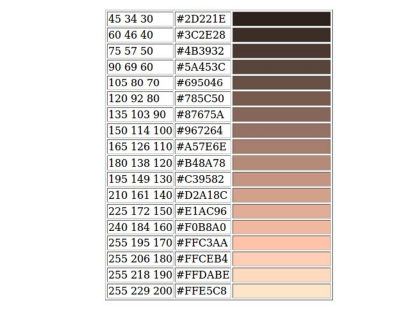

RGB Color Space

Common RGB formats are RGB888, RGB565, RGB555, and RGB444, where the three digits give the number of bits used for the red, green, and blue channels. In RGB888, each pixel is stored in 24 bits, with 8 bits for each of the red, green, and blue channels. Each channel's intensity can therefore range from 0 to 255 (2^8 = 256 levels), where 0 is the absence of light and 255 is the maximum intensity. This project uses the OV7670 camera, which can be configured over the serial camera control bus (SCCB) to output RGB565, RGB555, or RGB444. The DE1-SoC's VGA output to the screen uses the RGB888 format.
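In hardware, widening the camera's 16-bit RGB565 pixels to the 24-bit RGB888 expected by the VGA output is only a matter of wiring. The Python sketch below models that expansion; the function name is hypothetical, and copying each channel's high bits into its empty low bits (so that full scale maps to 255) is one common choice, assumed here rather than taken from this design.

```python
def rgb565_to_rgb888(pixel: int) -> tuple[int, int, int]:
    """Expand a 16-bit RGB565 pixel into 8-bit R, G, B channels (sketch)."""
    r5 = (pixel >> 11) & 0x1F  # bits 15..11
    g6 = (pixel >> 5) & 0x3F   # bits 10..5
    b5 = pixel & 0x1F          # bits 4..0
    # Replicate the high bits into the vacated low bits so that a full-scale
    # channel (e.g. 0x1F) becomes 0xFF rather than 0xF8.
    r8 = (r5 << 3) | (r5 >> 2)
    g8 = (g6 << 2) | (g6 >> 4)
    b8 = (b5 << 3) | (b5 >> 2)
    return r8, g8, b8
```

Padding with zeros instead (`r5 << 3`) is also valid; it just caps each channel slightly below 255.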

YUV/YCbCr Color Space

YCbCr, sometimes referred to as YUV, is another format in which an RGB color can be encoded. The Y (luminance, or luma) component represents brightness, the amount of white light in a color, while Cb and Cr are the chrominance (chroma) components, which encode the blue and red levels, respectively, relative to the luminance. Strictly speaking, YUV refers to the analog encoding and YCbCr to the digital one, but the two terms are often used interchangeably. The Y channel on its own encodes the grayscale levels of the image (a monochrome image).

The YUV formats provided by our camera are YUV444 and YUV422. In this notation, the first number is the width in pixels of the sampling region (usually 4), the second number is how many pixels in the top row of that region carry chroma samples, and the third number is how many pixels in the bottom row carry chroma samples. For example, in 4:2:2 every two horizontally adjacent pixels share one Cb and one Cr sample.
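To make the 4:2:2 sharing concrete, the sketch below expands a 4:2:2 byte stream into one (Y, U, V) triple per pixel. The byte order U, Y0, V, Y1 is an assumption (the camera's output order is configurable), and the function name is hypothetical.

```python
def unpack_yuv422(stream: bytes) -> list[tuple[int, int, int]]:
    """Expand a 4:2:2 byte stream into one (Y, U, V) triple per pixel.

    Assumes byte order U, Y0, V, Y1: each 4-byte group carries two luma
    samples and one chroma pair shared by both pixels.
    """
    if len(stream) % 4:
        raise ValueError("4:2:2 stream length must be a multiple of 4 bytes")
    pixels = []
    for i in range(0, len(stream), 4):
        u, y0, v, y1 = stream[i:i + 4]
        # Both pixels of the pair reuse the same chroma (U, V) samples.
        pixels.append((y0, u, v))
        pixels.append((y1, u, v))
    return pixels
```

Four bytes describe two pixels, so 4:2:2 averages 16 bits per pixel, compared with 24 bits for 4:4:4.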

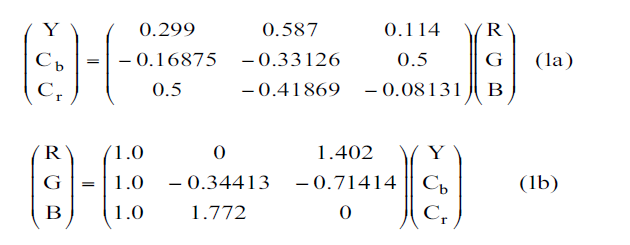

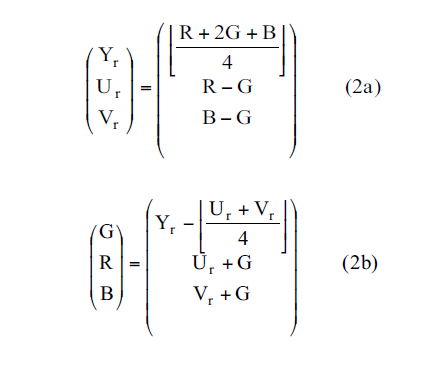

Color Space Transformations

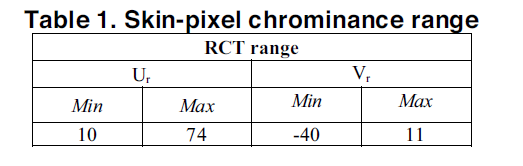

Figure 3 gives equations 1a, 1b, 2a, and 2b for converting between the RGB and YUV color spaces. Equations 1a and 1b are known as the irreversible component transformation (ICT), while equations 2a and 2b are known as the reversible component transformation (RCT). The ICT (eqs. 1a and 1b) requires floating-point hardware, whereas the RCT (eqs. 2a and 2b) uses integer-only operations, with a total of four additions and one shift operation per color pixel. Because the RCT is cheaper and easier to implement in hardware than the ICT, this design uses the RCT of equation 2a.
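Figure 3's equations are only available as an image, so the sketch below assumes the standard RCT form used in JPEG 2000, with the chroma labels chosen so that U = R − G (matching eq. 3) and V = B − G. It shows that the transform needs only integer additions and shifts, and that the inverse recovers the original pixel exactly.

```python
def rct_forward(r: int, g: int, b: int) -> tuple[int, int, int]:
    """Reversible component transform: integer adds and shifts only."""
    y = (r + (g << 1) + b) >> 2   # floor((R + 2G + B) / 4)
    u = r - g                     # chroma used by the skin test (eq. 3)
    v = b - g
    return y, u, v


def rct_inverse(y: int, u: int, v: int) -> tuple[int, int, int]:
    """Exact inverse of rct_forward; Python's >> floors negative values."""
    g = y - ((u + v) >> 2)        # G = Y - floor((U + V) / 4)
    r = u + g
    b = v + g
    return r, g, b
```

The multiply by 2 and divide by 4 are power-of-two shifts, which in hardware reduce to rewiring bits rather than real arithmetic.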

Experimental Research

Experimental research presented in [1] has shown that human skin tones fall within a narrow range of chrominance values, which allows the luminance (Y) component to be ignored entirely. Further studies have shown that the blue component contributes least to skin tone color [1]. Combining these results leaves the simple rule shown in eq. 3.

$$ U = R - G, \qquad 10 < U < 74 \tag{3} $$

Face Detection Algorithm

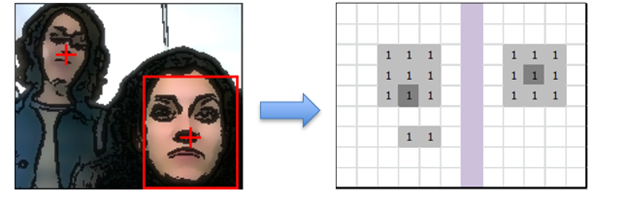

Step 1: Subsampling each frame

Each 640 × 480 frame is divided into 16 × 16 pixel blocks, yielding a 40 × 30 frame. This reduces the amount of data that the next four steps must process and store in M10K block RAM. Subsampling also produces larger skin patches, which makes the system less susceptible to features such as a moustache or spectacles.
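The text does not say how each 16 × 16 block is reduced to a single value; the sketch below assumes block averaging on one channel (apply it per channel for color data). The function name is hypothetical.

```python
BLOCK = 16  # 640 x 480 -> 40 x 30


def subsample(frame: list[list[int]], block: int = BLOCK) -> list[list[int]]:
    """Average each block x block tile of a single-channel frame (sketch).

    Assumes the frame's height and width are multiples of `block`.
    """
    height, width = len(frame), len(frame[0])
    out = []
    for by in range(0, height, block):
        row = []
        for bx in range(0, width, block):
            total = sum(frame[y][x]
                        for y in range(by, by + block)
                        for x in range(bx, bx + block))
            # block * block = 256 is a power of two, so in hardware this
            # division reduces to a right shift by 8.
            row.append(total // (block * block))
        out.append(row)
    return out
```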

Step 2: Threshold-based skin detection

Each subsampled pixel is classified using eq. 3: it is marked as skin when U = R − G satisfies 10 < U < 74.

The result is a binary image frame.
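Eq. 3 turns into a per-pixel comparison. The sketch below assumes 8-bit R and G channels and a subsampled frame stored as rows of (R, G, B) tuples; the names are hypothetical.

```python
U_MIN, U_MAX = 10, 74  # chroma window from eq. 3 (8-bit channels)


def is_skin(r: int, g: int) -> bool:
    """Eq. 3: skin when U = R - G lies strictly between 10 and 74."""
    u = r - g
    return U_MIN < u < U_MAX


def skin_map(frame_rgb: list[list[tuple[int, int, int]]]) -> list[list[int]]:
    """Turn a subsampled RGB frame into a binary skin map (1 = skin)."""
    return [[1 if is_skin(r, g) else 0 for (r, g, _b) in row]
            for row in frame_rgb]
```

Blue is ignored entirely, in line with the experimental finding that it contributes least to skin tone.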

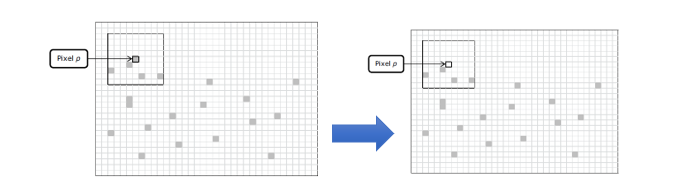

Step 3: Spatial filtering

To reduce noise, we apply a spatial digital filter. For every pixel p, the filter checks the neighboring pixels in a 9 × 9 neighborhood; if more than 75% of those neighbors are skin pixels, p is also classified as skin.
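A minimal sketch of the 9 × 9 neighborhood vote on the binary skin map. Two details are assumptions: the center pixel is not counted as its own neighbor, and neighbors that fall outside the frame are ignored. The "more than 75%" test is done with integers (4 · count > 3 · total), which avoids fractions in hardware.

```python
def spatial_filter(skin: list[list[int]], radius: int = 4) -> list[list[int]]:
    """p is skin if more than 75% of its (2*radius+1)^2 - 1 neighbours are."""
    h, w = len(skin), len(skin[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            count = total = 0
            for ny in range(max(0, y - radius), min(h, y + radius + 1)):
                for nx in range(max(0, x - radius), min(w, x + radius + 1)):
                    if (ny, nx) == (y, x):
                        continue  # skip the centre pixel itself
                    total += 1
                    count += skin[ny][nx]
            # count / total > 3/4, rewritten without division
            out[y][x] = 1 if 4 * count > 3 * total else 0
    return out
```

This fills isolated holes inside a face region and removes isolated skin-colored specks.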

Step 4: Temporal filtering

The binary skin maps of four consecutive frames are averaged over time. If a pixel's average exceeds a set threshold, the pixel is considered skin; this reduces flickering.
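The sketch below keeps the last four binary maps in a sliding window and thresholds their per-pixel average. The threshold of 0.5 is a hypothetical value, since the text only says it is "a set threshold"; the class name is also hypothetical.

```python
from collections import deque


class TemporalFilter:
    """Average the binary skin maps of the last `depth` frames (sketch)."""

    def __init__(self, depth: int = 4, threshold: float = 0.5):
        self.history = deque(maxlen=depth)  # oldest map drops out automatically
        self.threshold = threshold          # hypothetical tuning value

    def update(self, skin: list[list[int]]) -> list[list[int]]:
        self.history.append(skin)
        n = len(self.history)
        h, w = len(skin), len(skin[0])
        # A pixel is skin when its average over the window exceeds the threshold.
        return [[1 if sum(f[y][x] for f in self.history) / n > self.threshold
                 else 0 for x in range(w)] for y in range(h)]
```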

Step 5: Centroid computation and face tracking

The centroid of the detected skin region locates the face, which is important because this is the area that will be blurred. When two people are in view, the centroid calculation assumes that they are side by side.
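The centroid is the mean (x, y) position of the skin pixels. For the two-person case, the sketch uses a hypothetical heuristic built on the side-by-side assumption: split the frame at the column just right of the overall centroid and compute one centroid per side. It illustrates the idea and is not necessarily how this design does it.

```python
from typing import Optional, Tuple

Point = Tuple[float, float]


def centroid(skin, x_lo: int = 0, x_hi: Optional[int] = None) -> Optional[Point]:
    """Mean (x, y) of the skin pixels in columns [x_lo, x_hi), or None."""
    if x_hi is None:
        x_hi = len(skin[0])
    sx = sy = n = 0
    for y, row in enumerate(skin):
        for x in range(x_lo, x_hi):
            if row[x]:
                sx += x
                sy += y
                n += 1
    return (sx / n, sy / n) if n else None


def two_face_centroids(skin) -> list:
    """Hypothetical side-by-side split: one centroid per half of the frame."""
    overall = centroid(skin)
    if overall is None:
        return []
    split = int(overall[0]) + 1  # first column right of the overall centroid
    halves = (centroid(skin, 0, split), centroid(skin, split, len(skin[0])))
    return [c for c in halves if c is not None]
```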

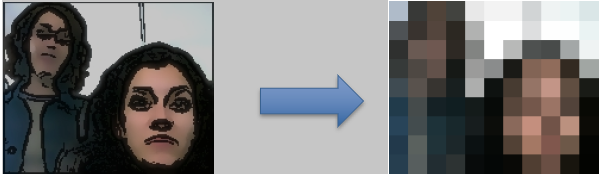

Anonymizer Blurring Algorithm

The anonymizer blurring algorithm uses the binary skin map produced by the skin detection and averaging steps to select the pixels classified as skin. For skin pixels, the anonymizer outputs the subsampled (pixelated) data; for non-skin pixels, it outputs the original video data.
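The output stage is a per-pixel multiplexer. In the sketch below, each full-resolution pixel (x, y) is mapped to its 16 × 16 block (x // 16, y // 16), and the skin map for that block decides whether the pixelated block value or the live pixel is emitted. The function and argument names are hypothetical.

```python
BLOCK = 16


def anonymize(video, pixelated, skin_map, block: int = BLOCK):
    """Per-pixel output select for the anonymizer (sketch).

    video:     full-resolution frame, e.g. 480 rows x 640 pixels
    pixelated: subsampled frame, one value per block (e.g. 30 x 40)
    skin_map:  filtered binary map with the same shape as `pixelated`
    """
    out = []
    for y, row in enumerate(video):
        by = y // block  # in hardware: drop the low 4 bits of the row counter
        out_row = []
        for x, pixel in enumerate(row):
            bx = x // block
            # Skin block -> pixelated value; otherwise pass the live pixel.
            out_row.append(pixelated[by][bx] if skin_map[by][bx] else pixel)
        out.append(out_row)
    return out
```

Because the block size is a power of two, the block index needs no divider, only the upper bits of the pixel counters.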

Architecture Description

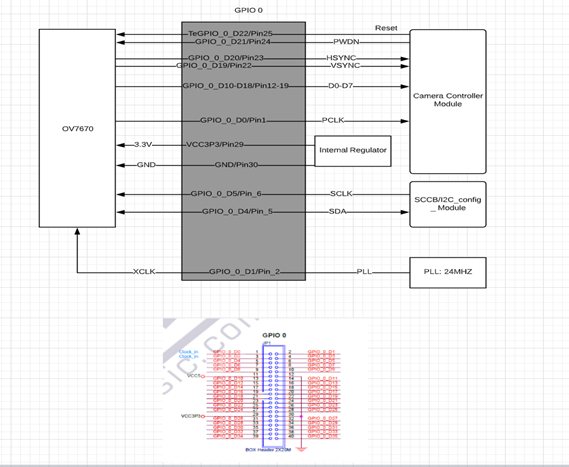

In the hardware architecture, the camera interfaces with the DE1-SoC board, on which the Verilog code is synthesized to implement the design.

Bill of Materials

| Name | Part Number | Cost | Quantity |
|---|---|---|---|
| Cyclone V SoC | 5CSEMA5F31C6N | $175 | 1 |
| VGA-Compatible Monitor | N/A | $60 | 1 |
| Camera Module | OV7670 | $20 | 1 |

Verilog Development

Before writing any Verilog code, we designed the system at a high level as block diagrams and partitioned it into what we expected to be the Verilog modules.

Video Pipeline

Using the information in the Background Information section, we created a module for each required function.

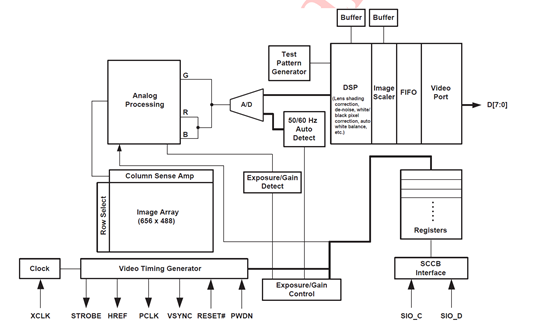

Camera Capture Module

One of the first modules written configures the camera's settings and captures the incoming VGA video data in the configured color space.

SDRAM Frame Buffer

Next, we built the frame buffer, which is needed to store the incoming video frames from the camera module; frames were expected to arrive at up to 30 frames per second. This module was partly developed with Altera's Qsys, a standard platform for integrating modules. We chose Qsys here because the SDRAM requires control logic to perform refresh operations, open-row management, and other delays and command sequences. The parts of this module interconnected in Qsys were the write (input) and read (output) FIFOs, which were synchronized with the SDRAM.
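A quick worked estimate of what the frame buffer must hold and absorb, assuming 16-bit pixels (YUV422 averages two bytes per pixel) at the full 640 × 480 resolution and the 30 frames per second stated above:

$$
\begin{aligned}
\text{frame size} &= 640 \times 480 \ \text{pixels} \times 2 \ \text{bytes/pixel} = 614{,}400 \ \text{bytes} \approx 4.9 \ \text{Mbit} \\
\text{write bandwidth} &= 614{,}400 \ \text{bytes/frame} \times 30 \ \text{frames/s} = 18{,}432{,}000 \ \text{bytes/s} \approx 18.4 \ \text{MB/s}
\end{aligned}
$$

A full frame of several megabits helps explain why the frame buffer lives in off-chip SDRAM, while only the much smaller 40 × 30 subsampled maps are kept in M10K block RAM.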

Figure 15 SDRAM Controller

Color Space Converters

Since the camera was configured to output YUV422 data, while the VGA output expects RGB888 and the thresholding equation needs RGB values, we created several modules that convert from YUV to RGB format.
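The conversion modules are not listed in the text. As an illustration, the sketch below converts one YCbCr sample to RGB888 in fixed point, assuming the camera's YUV follows the full-range ITU-R BT.601 (JFIF) equations. The coefficients 1.402, 0.344, 0.714, and 1.772 are scaled by 256 and rounded, so each multiply-and-shift maps directly onto FPGA multipliers. The function names are hypothetical.

```python
def clamp8(v: int) -> int:
    """Saturate to the 0..255 range of an 8-bit VGA channel."""
    return 0 if v < 0 else 255 if v > 255 else v


def ycbcr_to_rgb888(y: int, cb: int, cr: int) -> tuple[int, int, int]:
    """Full-range BT.601 YCbCr -> RGB with 8 fractional bits (sketch).

    1.402 * 256 ~ 359, 0.344 * 256 ~ 88, 0.714 * 256 ~ 183, 1.772 * 256 ~ 454.
    """
    cb -= 128  # centre the chroma samples around zero
    cr -= 128
    r = y + ((359 * cr) >> 8)
    g = y - ((88 * cb + 183 * cr) >> 8)
    b = y + ((454 * cb) >> 8)
    return clamp8(r), clamp8(g), clamp8(b)
```

If the camera is set to a limited (studio) output range instead, Y must first be offset by 16 and the coefficients rescaled accordingly.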

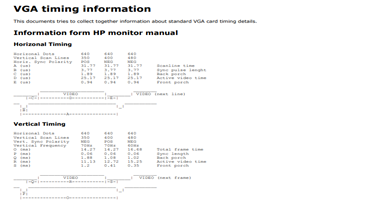

VGA Controller Module

The VGA controller module was solely responsible for sending VGA-compatible data to the video DAC on the board.
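A VGA controller is essentially two counters plus comparisons. The sketch below assumes the standard 640 × 480 at 60 Hz mode (nominal 25.175 MHz pixel clock). The porch and sync widths are the widely used values for that mode, not figures from this document, and the names are hypothetical.

```python
# Standard 640x480 @ 60 Hz timing, in pixel clocks (horizontal) and lines (vertical).
H_VISIBLE, H_FRONT, H_SYNC, H_BACK = 640, 16, 96, 48
V_VISIBLE, V_FRONT, V_SYNC, V_BACK = 480, 10, 2, 33
H_TOTAL = H_VISIBLE + H_FRONT + H_SYNC + H_BACK  # 800 clocks per line
V_TOTAL = V_VISIBLE + V_FRONT + V_SYNC + V_BACK  # 525 lines per frame


def vga_signals(h: int, v: int) -> tuple[bool, bool, bool]:
    """Return (hsync_n, vsync_n, active) for counter values h and v.

    Both sync pulses are active-low in this mode; `active` marks the
    region where pixel data should be driven onto the DAC.
    """
    hsync_n = not (H_VISIBLE + H_FRONT <= h < H_VISIBLE + H_FRONT + H_SYNC)
    vsync_n = not (V_VISIBLE + V_FRONT <= v < V_VISIBLE + V_FRONT + V_SYNC)
    active = h < H_VISIBLE and v < V_VISIBLE
    return hsync_n, vsync_n, active
```

In Verilog, h increments every pixel clock and wraps at H_TOTAL, and v increments on each h wrap and wraps at V_TOTAL.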

Anonymizer Module

After developing all the modules needed to pass video straight through without the blurring effect, we worked on the modules that perform the blurring algorithm and combined them into a single anonymizer module. The anonymizer module consisted of one large state machine that ran first-order IIR filters to perform the averaging function, and it implemented the algorithm described in the Face Detection Algorithm and Anonymizer Blurring Algorithm sections.

Spatial and Temporal Filters

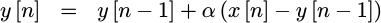

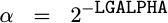

For both filters, a recursive averager was used: it maintains an average value at all times and updates that value incrementally with each new input.


The recursive averager is a first-order infinite impulse response (IIR) filter and was used after subsampling to compute the average of each 16 × 16 block. Let n be the sample number, x[n] the input, and y[n] the output:

$$ y[n] = y[n-1] - 2^{-\mathrm{LGALPHA}}\, y[n-1] + 2^{-\mathrm{LGALPHA}}\, x[n] $$

with x[n] = 0xFFFF and LGALPHA = 16. The purpose of this filter is to average the data over time to produce a high-fidelity binary image frame for mapping skin pixels.
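The recurrence above needs only a subtraction, an addition, and shifts by LGALPHA. In the sketch below, the state is kept scaled by 2^LGALPHA so that the small per-sample updates are not lost to truncation. This is an implementation assumption that gives the same recurrence, and the class name is hypothetical.

```python
class RecursiveAverager:
    """First-order IIR averager: y[n] = y[n-1] + 2**-k * (x[n] - y[n-1]).

    The accumulator holds y * 2**k (k = LGALPHA), so the shift by k does
    not discard the small per-sample updates.
    """

    def __init__(self, lgalpha: int):
        self.lgalpha = lgalpha
        self.acc = 0

    def update(self, x: int) -> int:
        # acc[n] = acc[n-1] - acc[n-1] * 2**-k + x[n]
        self.acc += x - (self.acc >> self.lgalpha)
        return self.acc >> self.lgalpha  # y[n]
```

The effective averaging length is roughly 2^LGALPHA samples, so LGALPHA = 16 gives very heavy smoothing. The example tests use a small value so the output settles quickly.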

Skin Pixel Classification

Two criteria were used to classify a pixel as skin. The first was eq. 3, U = R − G with 10 < U < 74, which was remapped to 100 < U < 500 because 10 bits were used to encode the chroma information. The second criterion was a threshold applied to the output of the spatial and temporal averaging filters described in the Spatial and Temporal Filters section. Threshold values range from 0x0000 to 0xFFFF, which is essentially a fixed-point representation of 0 to 1. For example, if the threshold is set to 0x8000 (about 0.5), a pixel must be detected as skin at least half of the time, on average, by the spatial and temporal averaging filters. Binary frames are thus averaged over time, and if a pixel's average reaches 0.5, the pixel is counted as skin.
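The two criteria can be chained per pixel: the 10-bit chroma window produces a binary sample (0xFFFF for skin, 0 otherwise), the recursive averager smooths it, and the averaged value is compared with the threshold. This is a sketch under those assumptions; the class name is hypothetical, and 0x8000 stands in for "half the time".

```python
U10_MIN, U10_MAX = 100, 500  # eq. 3 window remapped for 10-bit chroma
LGALPHA = 16
THRESHOLD = 0x8000           # ~0.5 on the 0x0000..0xFFFF fixed-point scale


class SkinClassifier:
    """Per-pixel skin decision combining both criteria (sketch)."""

    def __init__(self, lgalpha: int = LGALPHA, threshold: int = THRESHOLD):
        self.lgalpha = lgalpha
        self.threshold = threshold
        self.acc = 0  # averaged value scaled by 2**lgalpha

    def update(self, u10: int) -> bool:
        # Criterion 1: chroma window; skin maps to 0xFFFF (1.0), else 0.
        x = 0xFFFF if U10_MIN < u10 < U10_MAX else 0
        # Recursive average of the binary samples.
        self.acc += x - (self.acc >> self.lgalpha)
        average = self.acc >> self.lgalpha
        # Criterion 2: skin at least `threshold` of the time on average.
        return average >= self.threshold
```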

References

[1] M. Ooi, "Hardware Implementation for Face Detection on Xilinx Virtex-II FPGA Using the Reversible Component Transformation Color Space," in Third IEEE International Workshop on Electronic Design, Test and Applications, Washington, DC, 2006.

[2] S. Paschalakis and M. Bober, "A Low Cost FPGA System for High Speed Face Detection and Tracking," in Proc. IEEE International Conference on Field-Programmable Technology, Tokyo, Japan, 2003.